2025 Predictions Thread (Part 1)

It’s worth mentioning why we should make predictions in the first place.

The human brain has an unfortunate quirk of rationalizing hypotheses in a backwards direction. Or, to put it more succinctly:

“I knew it all along.”

It’s an insidious little statement. Colloquially known as the “hindsight bias”, it refers to our tendency to believe, after the fact, that past events were more predictable than they really were.

Historical outcomes get distilled into oversimplified views. This not only leads to overconfidence, but also interferes with genuine learning.

Sam Altman has a blogpost from ~2015 illustrating how comically wrong even the brightest minds can be about technological predictions.

There is not the slightest indication that [nuclear energy] will ever be obtainable. It would mean that the atom would have to be shattered at will.

- Albert Einstein, 1932

Alas, we are not all doomed to become Reverse Cassandras.

Hindsight may cloud our judgement, but, as with all skills, improvements are possible with determined practice.

By constraining ourselves to forward-thinking, falsifiable predictions, we can at least measure progress (or lack thereof).
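One common way to put a number on that progress is the Brier score: the mean squared gap between the odds you stated and what actually happened (0 is perfect, and always guessing 50% scores 0.25). A minimal sketch follows; the track record in it is made up purely for illustration and is not the outcome of any prediction in this post.

```python
def brier_score(forecasts):
    """Mean squared error between stated probabilities and 0/1 outcomes."""
    return sum((p - float(happened)) ** 2 for p, happened in forecasts) / len(forecasts)


# Hypothetical track record: (stated probability, did it happen?)
example = [(0.7, True), (0.8, True), (0.2, False), (0.3, False)]

score = brier_score(example)
# Baseline: someone who answers 50% to every question.
coin_flip = brier_score([(0.5, happened) for _, happened in example])

print(f"Brier score: {score:.3f} (always-50% baseline: {coin_flip:.3f})")
```

Lower is better, so the goal over several years of predictions is simply to watch that number shrink.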

Here’s my attempt.

My series on 2025 Computer Science predictions will be divided into multiple parts; this is Part 1. More topics will be covered in subsequent posts.

For a more generalized video overview, you can watch my rambling here.

Table of Contents (Part 1)

1. Cryptography

2. RISC-V

3. Compression

Cryptography

Prediction:

70% Odds NIST publishes new compliance standards requiring US Federal agencies (and their contractors) to switch to quantum-resistant algorithms for new systems.

Justification:

Current quantum computing factorization records are…kind of pathetic. The largest number reliably factored by Shor’s algorithm is 21.

More recently, in 2024, some researchers at the University of Trento managed to factor 8,219,999.

This was computed on a D-Wave Pegasus. Kinda cool, but not a *real* quantum computer.

The distinction is actually quite important. D-Wave systems are quantum annealers, not gate-based quantum computers.

Train your eye to notice the difference. You’ll find yourself less swayed by “quantum” computing drivel in popular media.

On the other hand, the US Federal Government takes the quantum computing threat very seriously.

Symmetric encryption standards are relatively safe. Even a perfect implementation of my buddy Grover only gives a square-root speedup on brute force, which effectively halves the key length.

The NSA basically shrugged, recommended AES-256, and said “good enough”.
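To see why AES-256 counts as “good enough”, here is a back-of-the-envelope sketch. Grover’s search needs on the order of 2^(n/2) evaluations instead of 2^n, so an n-bit key is left with roughly n/2 bits of effective security. The function name is made up for illustration, and the estimate ignores the (large) practical overhead of actually running Grover on real hardware.

```python
def grover_effective_bits(key_bits: int) -> int:
    """Approximate security level against Grover: ~2**(n/2) evaluations for an n-bit key."""
    return key_bits // 2


for name, bits in [("AES-128", 128), ("AES-256", 256)]:
    print(
        f"{name}: ~2^{bits} classical guesses, "
        f"~2^{grover_effective_bits(bits)} Grover iterations"
    )
```

AES-256 under Grover lands at about the same 128-bit level that AES-128 offers against classical brute force today.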

RSA isn’t so lucky.

Assuming a stable, error-corrected quantum computer with a chonky number of qubits (thousands) running Shor’s Algorithm, it’s game over.

At the moment, “real” quantum computers (aka computers capable of running Shor’s) are significantly out of reach.

IBM has a 433-qubit system called Osprey, which you can also rent on their cloud platform for a chill $96 a minute. Who knew quantum computing was almost as easy as spinning up an EC2 box?

“But Laurie!” you exclaim. “That seems pretty close! If you only need thousands of qubits to break RSA, it could happen at any moment!”

Not really.

physical qubits != logical qubits

Error-corrected (logical) qubits are needed to get any useful computing done. 433 physical qubits, with our current technology, only work out to a handful, at best, of logical qubits.
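For a rough sense of scale, here is a sketch that assumes a rotated surface code, where one logical qubit at code distance d costs d² data qubits plus d² − 1 measurement qubits. The distances below are illustrative choices, not measured requirements for any real machine, and the estimate ignores routing, connectivity, and magic-state overhead, all of which make things worse.

```python
def physical_per_logical(distance: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1


def logical_qubits(physical: int, distance: int) -> int:
    """How many logical qubits fit into a given physical qubit budget."""
    return physical // physical_per_logical(distance)


# 433 is the physical qubit count quoted above; the distances are assumptions.
for d in (3, 5, 7, 11, 15):
    print(
        f"distance {d:2d}: {physical_per_logical(d):3d} physical per logical "
        f"-> {logical_qubits(433, d)} logical qubits"
    )
```

Which distance you actually need depends on the hardware’s error rates, but the budget drops to single digits quickly as the distance grows.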

So why the fear in federal circles?

Y2K no longer permeates the nightmares of trepidatious Congresspersons. Nay, they dream of Y2Q.

In other words, cryptographic standards have to change well before theoretical attacks are remotely possible, because of “harvest now, decrypt later” surveillance strategies: traffic recorded today can be decrypted once the hardware catches up.

NIST produces the cybersecurity standards referenced by most federal agencies, although there are exceptions. The Post-Quantum Cryptography Standardization contest began in 2016, and the first finalized standards for the winners were published in August 2024.

CRYSTALS-Kyber is the winner for public-key encryption, so it’s likely to replace RSA in future contexts.

(note, not all of RSA’s functions are 1:1 replicated by Kyber; signatures are likely to be replaced with Dilithium)

The ciphers may be finalized, but there is much work to be done factoring in transition plans and official mandates. NIST published an initial public draft last November, but it has yet to be finalized.

(incoming rant about probabilities)

The current executive administration unexpectedly pulled the previous directive regarding memory-safe languages in a federal context, which I am now factoring into my odds. The White House had previously published it on their site, but the page is now gone.

That being said, it’s not uncommon for administration changes to result in a complete redo of the Whitehouse.gov site, so this may be an administrative fluke.

Normally, I’d put my odds at 85-90%, but the recent change of direction reduces my confidence.

70% Odds.

RISC-V

Prediction:

80% odds at least three major Linux distributions (e.g. Fedora, Ubuntu) will have official stable release channels for RISC-V architectures that aren’t just experimental builds by the end of 2025.

Justification:

RISC-V may be the coolest ISA on the block, but at the moment, adoption by the Linux community is limited.

Lurkers of distrowatch may (incorrectly) get the impression of Debian as the “old man” distribution from the relatively slow release cycle of the stable channel. Contrary to public perception, Debian is significantly ahead of the curve when it comes to RISC-V adoption.

Official support on the unstable Debian branch for riscv64 started 1.5 years ago, back in July of 2023. Most RISC-V SBC enjoyers will tell you that, yup, 99% of the time the default recommended distribution is Debian unstable.

Arch, somewhat obviously, has had unofficial riscv64 releases for a while now. Fedora is still in the experimental stage (Rawhide builds only).

It’s pretty reasonable to expect official, stable releases in the near future. Debian 13 is TBA, but if historical trends are anything to go by, a summer 2025 riscv64 stable build is quite likely.

Ubuntu will soon follow, considering Debian is their upstream and they already have an “early” (limited) RISC-V port. Considering the competition, I would wager Fedora competes in this space soon after.

80% Odds.

Prediction:

20% Odds the Raspberry Pi Foundation releases an official primary SBC based on a RISC-V SoC (no mixed cores).

Justification:

This one is a bit tricky. Although my low estimate may seem misguided at first, it’s important to evaluate what the community wants versus what is logistically possible.

The Achilles heel of the Raspberry Pi Foundation has always been, and forever will be, supply chain shortages.

The industrial market caused an unexpected surge in demand starting in the mid-2010s, and it’s never been the same since.

What began as a noble effort to create low-cost Linux SBCs for the educational market quickly ballooned into a commercial frenzy. The sheer convenience of the Raspberry Pi became its downfall. If it’s so easy to develop on…why not use the same board for production use?

Well, turns out there are a lot of reasons not to use SBCs in that environment, but everyone’s going to do it anyway. Welcome to the new normal.

The knock-on effect of this, of course, is that the Raspberry Pi Foundation now has significantly less ability to experiment with radical design changes. They quite literally can’t afford a flop; they are a publicly traded company now, after all.

In the early 2010s, the foundation probably could have gotten away with a full ISA shift. (RISC-V of course was barely a blip on the radar during that period, but I digress…)

Now, not so much. RPi’s relationship with Broadcom is very strong, and Broadcom has made no public statements about RISC-V.

Current RISC-V SoC / CPU manufacturers have, to put it nicely, a lack of maturity. Xuantie and SiFive are the big names here, but they still come out with processors that have hardware bugs in the CPU itself.

Supply chain risk, lack of software package support, backwards compatibility risks, as well as hardware constraints make this an unlikely proposition.

20% Odds.

Compression

Prediction:

80% Odds at least 2 major streaming platforms switch to AV1 as the default codec for HD and UHD content on capable devices by the end of 2025.

Justification:

Screw H265. All my homies hate H265.

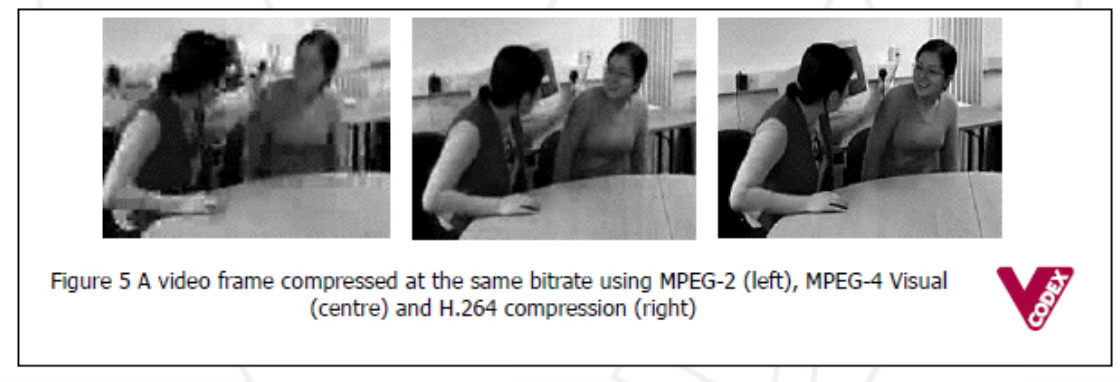

Video compression is always an interesting field of research. The potential savings in bandwidth costs alone are so high that there is strong justification for implementing more efficient standards.

It’s one of those rare events where technological advances are immediately noticeable on both sides of the equation: the distributors (streaming platforms) and the end user.

In many computer science domains, the impact of an efficiency improvement only translates to the business side. Sure, things like the migration from Oracle to Aurora databases saved AWS nearly $100 million (rumored), but as an end user, the difference was imperceptible.

To understand the video compression landscape, we first have to talk about The Codec Wars.

On one side, we have the evil Royalists, defenders of the proprietary licensing model. Their forces consist of the MPEG LA and their allies.

On the other, we have the Free Streamers, proponents of royalty-free codecs. Their forces consist of the Alliance for Open Media and their sympathizers.

The Royalists got a head start, with H.265 (HEVC) standardized in 2013, but its licensing was rife with fragmented patent pools.

The tiered royalty structure made licensing fees unpredictable, and prohibitive for smaller companies.

You’re looking at minimum royalties of ~$100k a year!

That said, the previous success of H264 led hardware manufacturers to adopt HEVC decoding relatively quickly. Consumer devices broadly adopted decoders in the mid-2010s. The iPhone 6 started using H265 for FaceTime, and NVIDIA’s 900-series featured on-device decode as well.

AV1 (the Free Streamers’ codec) got its start in 2015, when various FAANG companies formed a consortium to build a next-generation, royalty-free video compression alternative. Early implementations of the AV1 codec were significantly more computationally intensive, but optimizations have continued to improve speed over time.

In the 2020s, hardware manufacturers started to adopt AV1 decode (Intel, NVIDIA, etc), making streaming adoption more viable.

Twitch was one of the more intriguing experimental adopters; AV1 shows about 30% better compression efficiency compared to H265 for the same quality. Due to the nature of the platform essentially being a “sea of individual encoders” (streamers), royalty-free options are particularly relevant to their user base.

Royalties alone represent such a massive potential cost savings for streaming platforms that my optimism remains high for the swift adoption of AV1 in the near future. Hardware decode is quickly reaching a critical mass on end-user devices; it’s only a matter of time before everyone makes the switch.
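Royalties aside, the roughly 30% efficiency figure quoted above adds up quickly in bandwidth. Here is a rough sketch that reads “30% better compression efficiency” as “30% lower bitrate at the same quality”, which is my assumption. The 15,000 kbps UHD bitrate is a made-up round number, not a figure from any platform.

```python
def av1_bitrate(hevc_kbps: float, efficiency_gain: float = 0.30) -> float:
    """Bitrate AV1 would need for the same quality, given a fractional saving over HEVC."""
    return hevc_kbps * (1.0 - efficiency_gain)


def gigabytes_per_hour(kbps: float) -> float:
    """Convert a stream bitrate in kilobits per second to gigabytes per hour."""
    return kbps * 1000 / 8 * 3600 / 1e9


hevc_kbps = 15_000  # hypothetical UHD stream bitrate
av1_kbps = av1_bitrate(hevc_kbps)

print(f"HEVC: {gigabytes_per_hour(hevc_kbps):.2f} GB per viewer-hour")
print(f"AV1:  {gigabytes_per_hour(av1_kbps):.2f} GB per viewer-hour")
```

Multiply the per-hour difference by however many viewer-hours a platform serves and the incentive is hard to ignore.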

80% Odds.

Prediction:

30% odds a major streaming platform announces a partnership with a particular TV, set-top box, or hardware vendor (e.g. NVIDIA) to certify a device for a sort of “enhanced AI streaming”.

Justification:

Okay, this one seems weird, but hear me out.

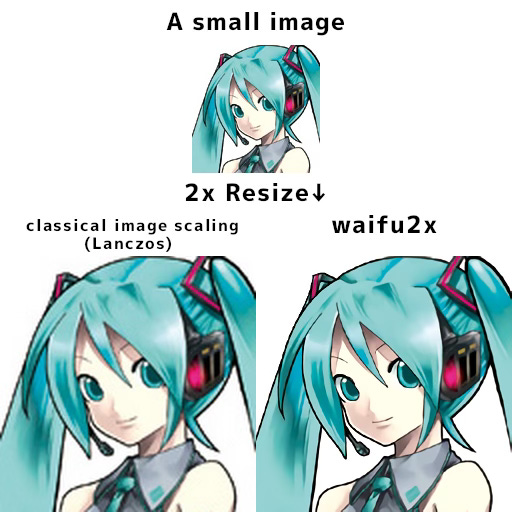

Anime upscalers have gotten ridiculously good.

Anime artwork, mostly due to the flat colors and sharp linework, responds particularly well to neural-net upscalers. Many series only exist in lower resolutions, never getting a proper “remastering” treatment, often due to a lack of popularity.

The desire of fans to view their favorite shows in upscaled resolutions, combined with the rapid progress in the effectiveness of the techniques, led to a snowball effect of improvement.

Don’t get me wrong, it’s still a *niche* subject. But the tech is already there.

Let me attempt to convince you another way.

In 2023, Microsoft released their “Video Super Resolution” as an optional feature to reduce compression artifacts of low-bitrate videos in Edge.

As of January 2025, it is still disabled by default, but the option is there. And it looks…not that bad actually.

It’s going to take some convincing before the general public wholeheartedly accepts neural video upscaling as a “default” feature, but the artifact reduction is quite impressive.

Much like how many consumer televisions implement frame generation for motion interpolation (gross), I wouldn’t be *that* surprised if this technique gets adopted more widely.

I place low odds currently because the hardware and implementations are still young, but then again, streaming platforms need new ways of differentiating themselves from each other.

No one really has a leg up on anyone else, and neural upscaling seems just *crazy* enough that some streaming platform might implement it as an attempted competitive edge.

To be fair, I don’t think it’s a great idea. But it is *an* idea.

“A wrong decision is better than indecision.” - Tony Soprano

30% Odds.

Bye for now. I’ll see you again in Part 2 shortly.

I think the neural upscalers should be brought up to 70-80%

Gamers adopted upscalers like FSR and DLSS pretty fast so there is consumer precedent for this. I think as long as they are implemented well and don't look TOO bad, it'll probably make bank for whoever invests/makes it.

Also love the ARM tutorials, helped me a lot!

Incredible predictions. Very spot on in 2026.