2025 Predictions Thread (Part 2)

My series on 2025 Computer Science predictions is divided into two parts; this is Part 2.

Part 1 is available here:

For a more generalized video overview, you can watch my rambling here.

Table of Contents (Part 2)

1. Memory Safety

2. Generative AI

3. Supply Chain / SBOMs

4. Programming Languages

5. Conclusion

Memory Safety

Prediction:

At least 70% of merged pull requests for the top 500 GitHub repositories with “embedded” or “operating-system” tags will be in memory-unsafe languages for 2025.

Justification:

Writing this section has made me realize the vagueness of my original prediction in the YouTube video.

Turns out, writing falsifiable developer metrics is pretty hard.

GitHub publishes an annual report (“The Octoverse”) as a sort of state-of-the-union address for the world of open source. Reading it also completely exposed my bias towards backend development.

I kind of forgot how popular front-end web frameworks are.

GitHub isn’t the only game in town. Stack Overflow publishes an annual developer survey, with broadly similar, though not identical, results for 2024 language popularity.

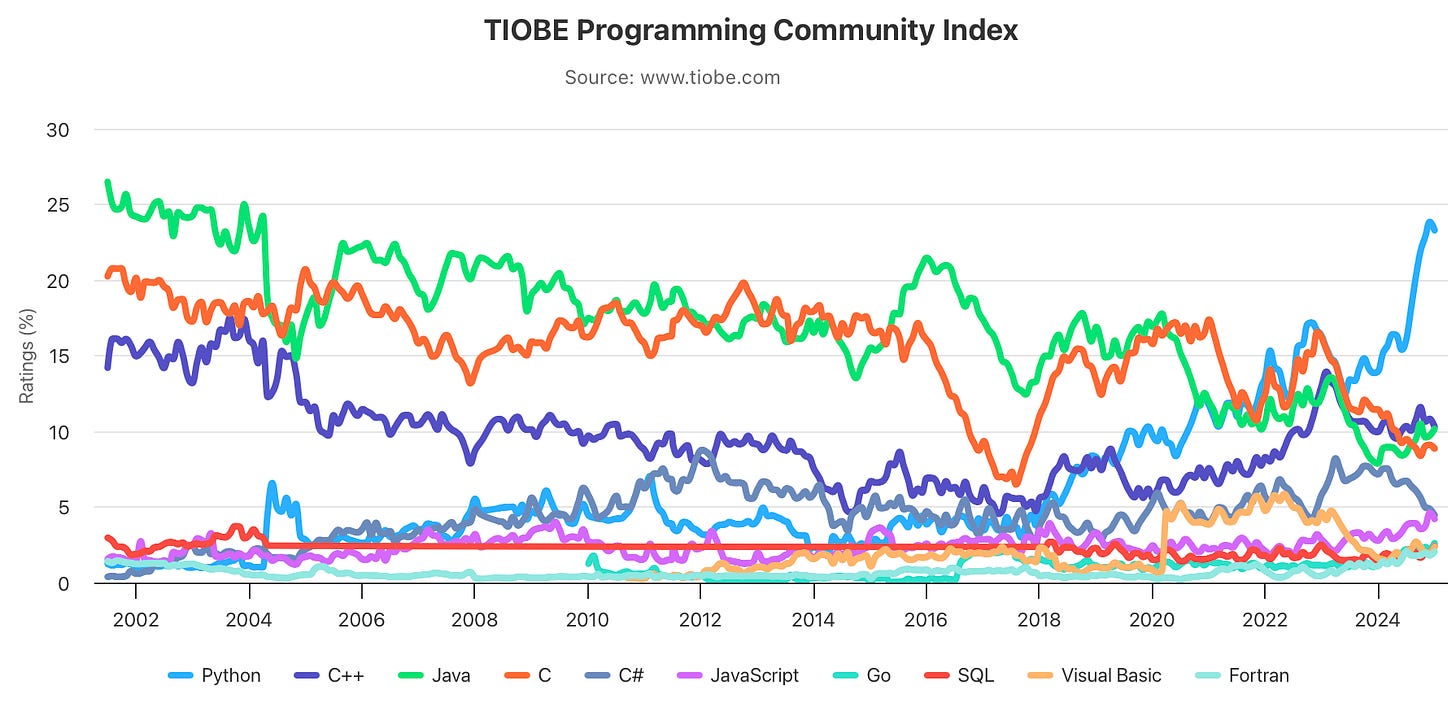

TIOBE, a software group based in the Netherlands, takes a rather different approach to measuring language use with their TIOBE Index.

In any case, it’s all a matter of how you wish to slice the pie. Explicit specificity is required to make a falsifiable prediction in this case; vague interpretations are too easy to game.

GitHub may, at first glance, *feel* exhaustive, but what percentage of repositories are little more than forked hello-worlds or homework problems?

A better way to measure the adoption of memory-safe languages is to narrow our search to critical pieces of code that:

1. Have high consequences for failure

2. Are widely used across many industries

3. May involve safety-critical systems or other human factors

Hence my prediction focusing on the “operating-system” and “embedded” tags.
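To make the metric concrete, here is a minimal sketch of how the share could be computed once merged PR counts per language are in hand. Everything in it is an assumption for illustration: the `MEMORY_UNSAFE` set, the `unsafe_pr_share` helper, and the sample counts are hypothetical, and how a PR gets attributed to a language (repository primary language vs. files changed) is a choice the prediction itself doesn't settle.

```python
# Assumption: which languages count as "memory-unsafe" is a judgment call.
MEMORY_UNSAFE = {"C", "C++", "Assembly"}


def unsafe_pr_share(merged_prs_by_language):
    """Return the fraction of merged PRs attributed to memory-unsafe languages."""
    total = sum(merged_prs_by_language.values())
    if total == 0:
        raise ValueError("no merged pull requests to measure")
    unsafe = sum(
        count
        for language, count in merged_prs_by_language.items()
        if language in MEMORY_UNSAFE
    )
    return unsafe / total


# Hypothetical counts, for illustration only (not real GitHub data).
sample = {"C": 600, "C++": 150, "Rust": 200, "Go": 50}
share = unsafe_pr_share(sample)

# The prediction holds if the share is at least 70%.
print(f"{share:.0%} memory-unsafe; prediction holds: {share >= 0.70}")
```

With these made-up numbers, 750 of 1,000 merged PRs fall in the unsafe bucket, so the prediction would hold. The hard part in practice is gathering the counts, not the division.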

If anything, you might expect those requirements to induce a bias towards the adoption of memory-safe languages. After all, the consequences of memory issues in this space are proportionally enormous compared to, say, a web framework!

That being said, I remain bearish on overall adoption.

The sheer backlog of legacy code written in unsafe languages like C and C++ is so massive that I still expect the majority of PRs to be written with them (using the constraints I mentioned earlier).

DARPA proposed the “TRACTOR” (Translate All C TO Rust) program mid-2024 to some fanfare, but the size of the contract is much too small to make a major difference in the industry.

($25k for the grand prize in the first phase, intended for undergrad students, but still)

The fact is, translation of code costs money. A lot of money. Even with massive aid from LLMs, you still enter the category of “why fix what isn’t broken”. It becomes even worse with mission-critical or safety-critical systems, where a more accurate statement might be “why re-certify what isn’t broken”.

Washington doesn’t seem sold on the prospect either. Although a directive was posted in early 2024 urging the use of memory-safe languages for government contractors, the current administration appears lukewarm.

As posted in my Part 1 Thread:

The current executive administration unexpectedly pulled the previous directive regarding memory-safe languages in a federal context, which I am now factoring into my odds. The White House previously published this on their site, which is now gone.

Obviously, the whole world doesn’t revolve around US directives, but it’s an interesting signal about the state of memory-safe language adoption.

70% Odds.

Generative AI

Prediction:

80% odds a major AAA studio releases a videogame using an LLM for NPC interaction enhancement before the end of 2025.

Justification:

To clarify, when I use the word “LLM” in this context, I am referring to actual output generated on the fly in either a cloud server (or less likely a local model) for dialog generation. I do not mean pre-scripted dialogue hardcoded in the game that may have originally been LLM generated (which would also be difficult to verify, and I suspect is already happening).

This topic is going to ruffle some feathers, and I suspect many of you will think my odds are too high / misaligned.

Before you bring out the pitchforks, let’s look at the current evidence.

Ubisoft:

Demo’d NEO NPCs at GDC 2024. LLM responses + curated backstories from writers. Currently in R&D.

Electronic Arts:

In May 2024, CEO Andrew Wilson described “a real hunger” to adopt generative AI “as quickly as possible”.

Take-Two (Rockstar):

CEO Strauss Zelnick in late 2023 argued “[with generative AI] you could imagine all the NPCs becoming really interesting and fun.”

Nvidia:

Nvidia’s Avatar Cloud Engine, or ACE for short, is a suite of curated AI models aimed at game developers. It was initially released in 2023, specifically to “bring intelligence to NPCs through AI-powered natural language interactions.”

Like it or not, I think we are going to see LLM-enhanced NPCs very soon, even if they arrive half-baked.

There’s going to be a large first-mover advantage to whichever AAA studio releases it first. Even if there are major issues (jailbreaks, inappropriate responses, etc), the flurry of press coverage is likely to increase sales.

As they say, all press is good press.

None of this is new; the modding community has been experimenting with this for years.

Nintendo seems to be the one standout so far, explicitly denouncing AI use.

80% odds.

Generative AI (cont)

Prediction:

60% probability a major traditional stock music platform will release an AI music generator / composer to generate custom, copyright-free tracks on the fly before the end of 2025.

Justification:

Alright, I admit it. This “prediction” is also a personal wish of mine.

Do you know how hard it is to find non-crap copyright-free music tracks for background audio? It’s quite trivial to exhaust the world’s supply of listenable tracks without much effort!

With the number of YouTube videos I’ve released on my channel, I’ve easily gone through 50+ albums’ worth of content, just as background noise. There isn’t *that* much music out there.

Believe it or not, lo-fi hiphop girl isn’t infinite.

Many background music “genres” (if you can call them that) are very simplistic. If there is a type of music that generative AI should be able to disrupt, it should be this one.

I’m not looking for siren songs here. Just a little lo-fi will do.

Time for a reality check.

Envato, Adobe, Artlist, and Soundstripe are the big players in this space. Thankfully, the industry seems large enough that a little R&D should pay dividends.

Coherent Market Insights estimated the stock music market size in 2020 at $964 million, with a projection of hitting $2 billion in 2028.
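For a sense of scale, those two figures imply a compound annual growth rate that is easy to back out. The snippet below is just arithmetic on the numbers quoted above; the `implied_annual_growth` helper is my own name, and the calculation assumes smooth compounding over the eight years, which real markets rarely deliver.

```python
def implied_annual_growth(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and span."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $964 million in 2020, projected $2 billion in 2028.
rate = implied_annual_growth(964e6, 2e9, 2028 - 2020)
print(f"Implied annual growth: {rate:.1%}")
```

That works out to a bit under 10% per year: healthy, but not the kind of market that forces incumbents to reinvent themselves.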

Apple quietly acquired a UK startup named “AI Music” (how original) in 2022, though it doesn’t seem like they’ve done much with it.

Soundstripe, which is quite popular with Twitch streamers, released an AI song editor last year, but that’s for modification / extension of existing tracks, not creating new ones.

The elephant in the room is, of course, Suno, which offers full AI generated music capabilities. It’s not exactly suited for streamers or editors though.

What I’m really looking for is *endless* music, not hand-creation of individual tracks.

Frankly, I might be over-optimistic here. Existing stock music companies are, for lack of a better word, kinda boomer. They literally thrive off of commercial license rights, so I doubt many will have the foresight to invest in technologies that threaten their entire business model.

Hence, I’ve lowered my odds compared to my original prediction in the YouTube video. Watch this space though, it’s ripe for disruption by a startup.

60% Odds.

Supply Chain / SBOMs

Prediction:

90% odds at least 3 major Fortune 500 companies will publicly announce SBOMs (Software Bills of Materials) as a mandatory, contractual requirement and deliverable for their third-party vendors.

Justification:

In my video, I mentioned SBOMs as a way to (somewhat) mitigate the risks of supply chain attacks. At the very least, it accelerates forensic analysis and mitigation when such an attack occurs.

The Director of CISA agrees.

Log4j spurred a flurry of activity in the tech space, much of which I experienced first-hand during my tenure at Microsoft.

In an effort to build public trust, I expect many Fortune 500 Companies to establish strict SBOM requirements as part of their contracts. Boeing changed their supplier agreement to require SBOMs as a deliverable recently, in November of 2024.

I suspect that high-risk industries (defense, healthcare, finance, aviation) will be the first to adopt such practices. In fact, if you’re building a medical device, the FDA has required an SBOM to get FDA approval since October 1st, 2023.

So why hasn’t everyone adopted SBOMs yet?

Are they just not making public announcements? Keeping it internal?

Maybe. But not likely.

The real reason, as always, comes down to money. Many of us don’t like to admit it, but at the end of the day, most software security is a cost center, not revenue generating.

Improvements in software security practices are often the direct result of a response to a previous attack. No sense in wasting money being ahead of the curve if shareholders don’t care.

But when something big happens, they care. A lot.

It will probably take another large, supply-chain related incident to get the next wave of Fortune 500 companies announcing SBOM adoption as a mitigation.

Unfortunately, I’d say that risk is pretty likely.

90% Odds.

Programming Languages

Prediction (2-Parter):

70% Odds that Rust will have less YoY% growth for 2025 than Zig, as determined by the number of new Stack Overflow questions tagged with said language.

90% Odds that Rust will have less YoY% growth for 2025 than Zig, as determined by official subreddit size.

Justification:

I’m taking advantage of the Law of small numbers, and I’m not ashamed to say it.

Cheating can be useful if it gets my point across.
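Here is what that “cheat” looks like in numbers. This is a minimal sketch with made-up community sizes (they are not real Stack Overflow or Reddit figures), and `yoy_growth` is a hypothetical helper; the point is only that a small base turns a modest absolute gain into a large percentage.

```python
def yoy_growth(previous, current):
    """Year-over-year growth as a fraction of the previous year's value."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) / previous


# Hypothetical numbers, for illustration only.
small_base = yoy_growth(1_000, 1_500)       # gains 500 members
large_base = yoy_growth(100_000, 110_000)   # gains 10,000 members

print(f"small community: {small_base:.0%} growth")
print(f"large community: {large_base:.0%} growth")
```

The larger community adds twenty times as many members in absolute terms, yet the smaller one wins comfortably on YoY%. That asymmetry is the whole basis for the odds above.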

I don’t think anyone would argue that Rust wasn’t the “hype” language of the last few years. The 2024 Stack Overflow Developer survey agrees:

Alas, everything has an expiration date. In the early 2010s, the general consensus was that Scala was going to “replace Java”…look where we are now. Swift hit an insane amount of momentum in the mid-2010s, only for the “wow factor” to stabilize in the 2020s. Don’t get me started on Ruby.

Currently, I think Rust is having its “Swift moment”.

Rust will probably survive better than Scala, but we’re starting to hit the boring stage. Years ago, all it took was writing a terminal emulator in Rust to hit the front page of HN. Old news.

The new kid on the block is Zig.

To be clear, this isn’t an endorsement of the language itself; language fights are silly. All programming languages are basically the same; politics is a mind-killer.

That being said, I think Zig is in a ripe spot to become the next hype language. Zig’s philosophical simplicity of “C but safer” is appealing compared to the complexity of Rust’s borrow checker.

Minimalistic languages are fun; a resurgence of C-like languages is certainly possible. Zig is not going to take over the world, but something has to occupy devs’ weekend projects. It may well be the rising star of 2025.

That’s going to wrap it up for me this year as far as predictions go. We’ll take a look back in a year to see how the predictions fared.

Future blogposts will be on other interesting stuff; this was a special series.

LW out.

Your predictions on programming languages had caught my eye. I happen to be developing my own thread/memory-safe programming language called "Iridium", which will serve as an alternative to other lower-level languages like Rust, C, etc. That being said, my own predictions in this space are a bit biased lol.

Ah wow, seems as if the other 3 comments are spam? ;-/

This Part 2 largely seems to reiterate your talking points in Part 1.

Maybe refined? I mostly agree with the predictions, even if I think some of them are absolutely abysmal technologies that should be abandoned.

Take LLMs for example: machine translation is intrinsically awful with some languages (e.g. Japanese and English; also see: https://www.youtube.com/watch?v=4J4id5jnEo8)

So-called "AI" has been used in the game industry for decades. I don't doubt that some game devs may use cloud based LLMs for NPCs, but it will be awful. There are reasons I gave up on the video game industry even though I also worked for The Museum of Art and Digital Entertainment which is a 501c3 nonprofit playable video game museum.

For example, Todd's Adventures in Slime World (1992, Atari Lynx, Epyx/etc.) had "music" which was "AI" generated if you could call it that. It certainly was audio, it was also, not good. Probably a great way to cut a corner and save some dev costs rather than pay a musician (because everyone knows real musicians are all greedy, wait, no they aren't, though beware the "biz" music industry and publishing sorts).

Fatal Labyrinth for the SEGA Genesis (1991, aka 死の迷宮) used procedurally generated dungeons/levels, and the novelty wears off almost instantaneously. It's an awful game.

So, my prediction would be more like: if LLMs are used for "cloud" based NPC? They'll just continue to be awful. There are already examples I think? Twitch Streamer QueenBee was playing some "AI generated" game on stream a year or two ago. It did not seem good. I do not remember its name.

So-called "AI" has been used in video games for decades, but more often than not as enemy logic. Take 侍魂「samurai tamashii」aka Samurai Spirits aka Samurai Shodown: its enemy "AI" is so robust that, for me at least, I had to start off playing "stupidly" to convince the "AI" to go easy on me if I ever wanted to complete the game, because each opponent gets progressively more difficult. If you show off at the start, the difficulty ratchets up so quickly that completing the game, at least with my inept reflexes, was impossible.

A lot of early Neo Geo games had tutorial/training modes too.

Which reminded me of when Doug Engelbart described how J.C.R. Licklider cut off Engelbart's funding for NLS. In an interview that Robert X. Cringely did with Doug (which I have archived offline and, last I checked, do not see online), Engelbart described how "Lick" (his nickname) seemed to have caught the "AI" bug. When Lick asked how NLS was going, Engelbart said something to the effect of, "great! We just hired some women to teach customers how to use the system." Lick apparently responded that: "the systems should be training the users, not people."

Paraphrasing Doug further he said something to the effect of: "In decades since then, I have yet to encounter a system capable of training a novice user. But Lick seemed convinced we were missing out on some fundamental design requirement."

I do agree that I doubt most memory "unsafe" language based code will benefit from being rewritten in memory "safe" languages, but I do think it is plausible and achievable to augment existing code bases with tools to improve memory safety without rewriting them entirely. Admittedly, I don't think projects such as Safe-C and Safe C++ are really targeting compilers at the right layer to achieve desirable results (where a desirable result would be something closer to: update my LLVM/clang or gcc, recompile, done).

The SBOM prediction stuff which you also addressed in part 1 I think I probably agree with too, though I am still fixated mostly on hardware level supply chain attacks and consider software the realms of dependency hells.

Cool Valentine's Day video posted today on YouTube BTW. I hope you have a lovely one; I know seeing your smiling face brightened my day a little bit, so thanks for that!

Lots of love!